The AI Maturity Reality Check: What We Heard, What We Learned, and What Comes Next


Authored by Liliana Lopez-Sandoval

Customer experience leaders came to Customer Contact Week (CCW) Orlando with one big question on their minds: how do we move AI from “promising” to “proven” without sacrificing quality, compliance, or customer trust?

That question came up everywhere, from hallway conversations to standing-room sessions. It also shaped the AI Maturity Workshop that I co-led with Jon Brown (SVP of Client Results), where we walked through a practical AI Maturity Model focused on what matters most right now: operational readiness, measurable outcomes, and the ability to scale responsibly across channels, teams, and regions.

This year’s event brought the CX community together from January 21st to 23rd, 2026, at the JW Marriott Bonnet Creek in Orlando, Florida. We loved getting face time with so many support and operations leaders. Beyond the workshop, the momentum continued throughout the event.

The Liveops team spent the week meeting with leaders across industries, sharing what we are seeing in real programs, and talking through what it takes to operationalize AI in customer experience. If you stopped by the Liveops booth, thank you. Those conversations helped reinforce what we hear consistently: the challenge is rarely a lack of enthusiasm for AI. The challenge is building the systems, governance, and operating model to make it work day after day.

To keep the discussion grounded in reality, we also ran a series of real-time polls during the workshop. The results were honest, consistent, and incredibly useful.

Below is a recap of what the room told us, paired with the poll visuals and a short takeaway for each.

The Biggest Themes We Heard in the Room

1) Most teams are reacting, not adapting in real time

Many organizations can adjust after something breaks, but fewer have built a system that continuously learns and improves without requiring a major lift.

2) Scaling across channels is possible, but it is not effortless

Leaders are finding ways to expand AI beyond a single workflow, but scaling still takes heavy coordination across tools, data, and governance.

3) ROI is expected, but still not consistently visible

There is a strong belief that AI should deliver value. The challenge is proving it with metrics that leaders can trust and repeat.

4) Data, integration, and operating model decisions are the real blockers

The limiting factor is rarely the idea of AI. It is fragmented systems, complex integration work, unclear ownership, and change management friction.

What the Polls Revealed About AI Maturity in CX

The live polls we ran during the workshop show where CX teams truly are on the AI maturity curve.

The results reinforced what many leaders are experiencing firsthand: progress is real, but consistency, scalability, and measurable ROI still depend on the operating model behind the technology.

Below are the poll visuals, each paired with a short takeaway.

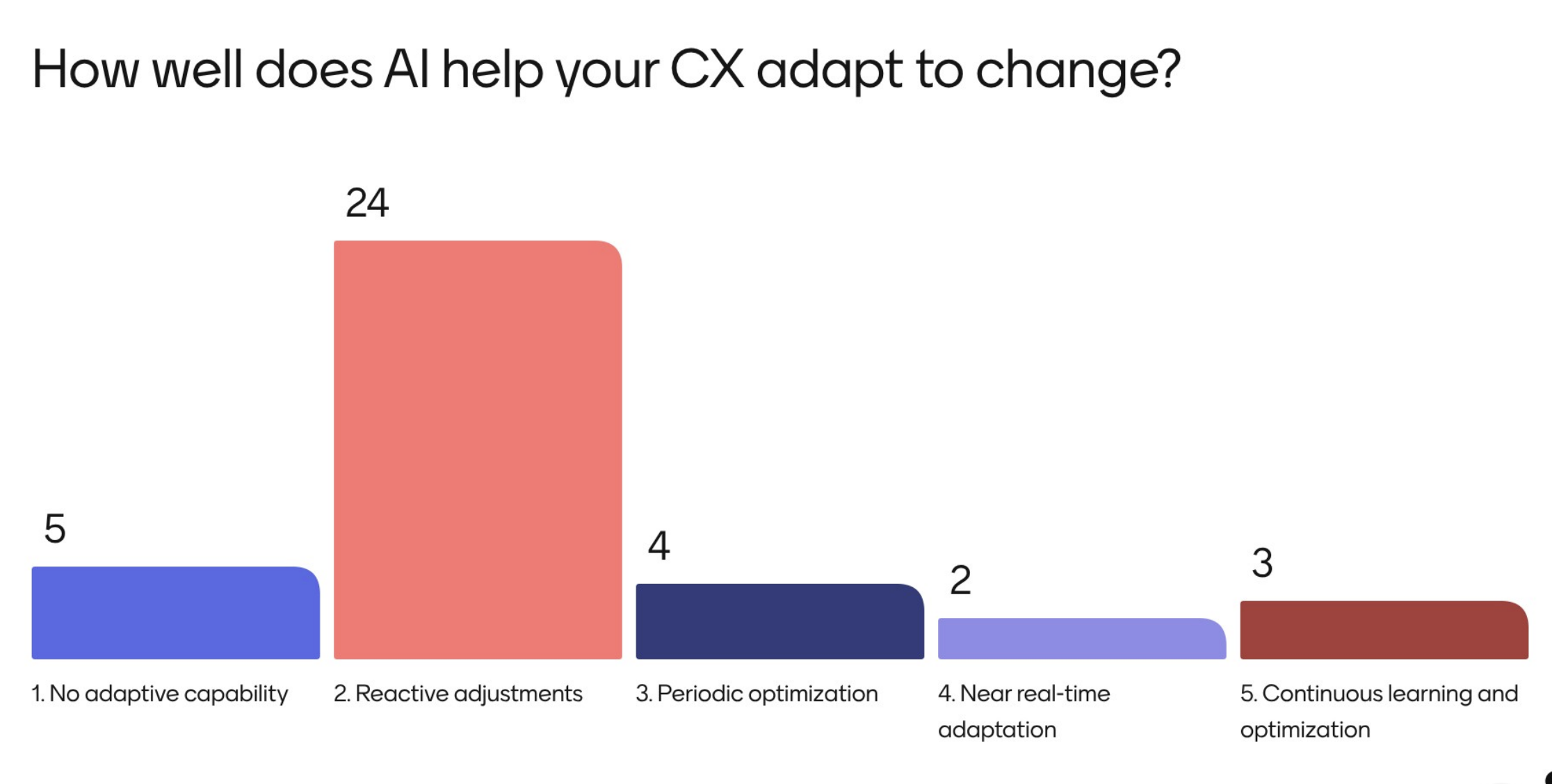

Poll 1: How well does AI help your CX adapt to change?

About 63% of respondents said AI supports reactive adjustments, which suggests many teams are responding faster, but not yet continuously adapting. Only about 13% pointed to near real-time adaptation or continuous learning, signaling a gap between insight and true closed-loop improvement.

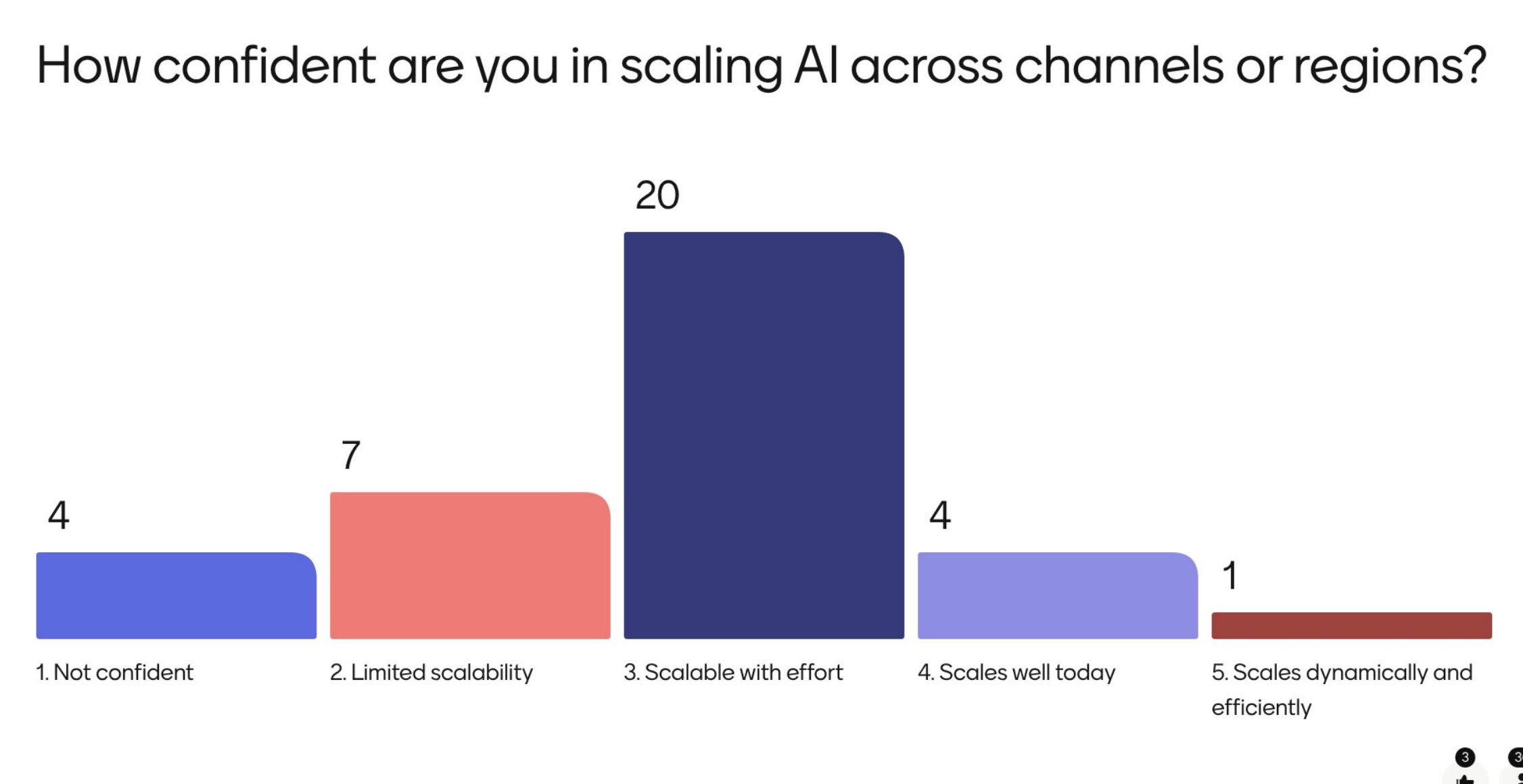

Poll 2: How confident are you in scaling AI across channels or regions?

Roughly 56% reported AI is scalable with effort, which is a realistic, important signal: scaling is possible, but it still requires disciplined rollout, governance, and cross-team alignment. Only about 3% felt they are scaling dynamically and efficiently today.

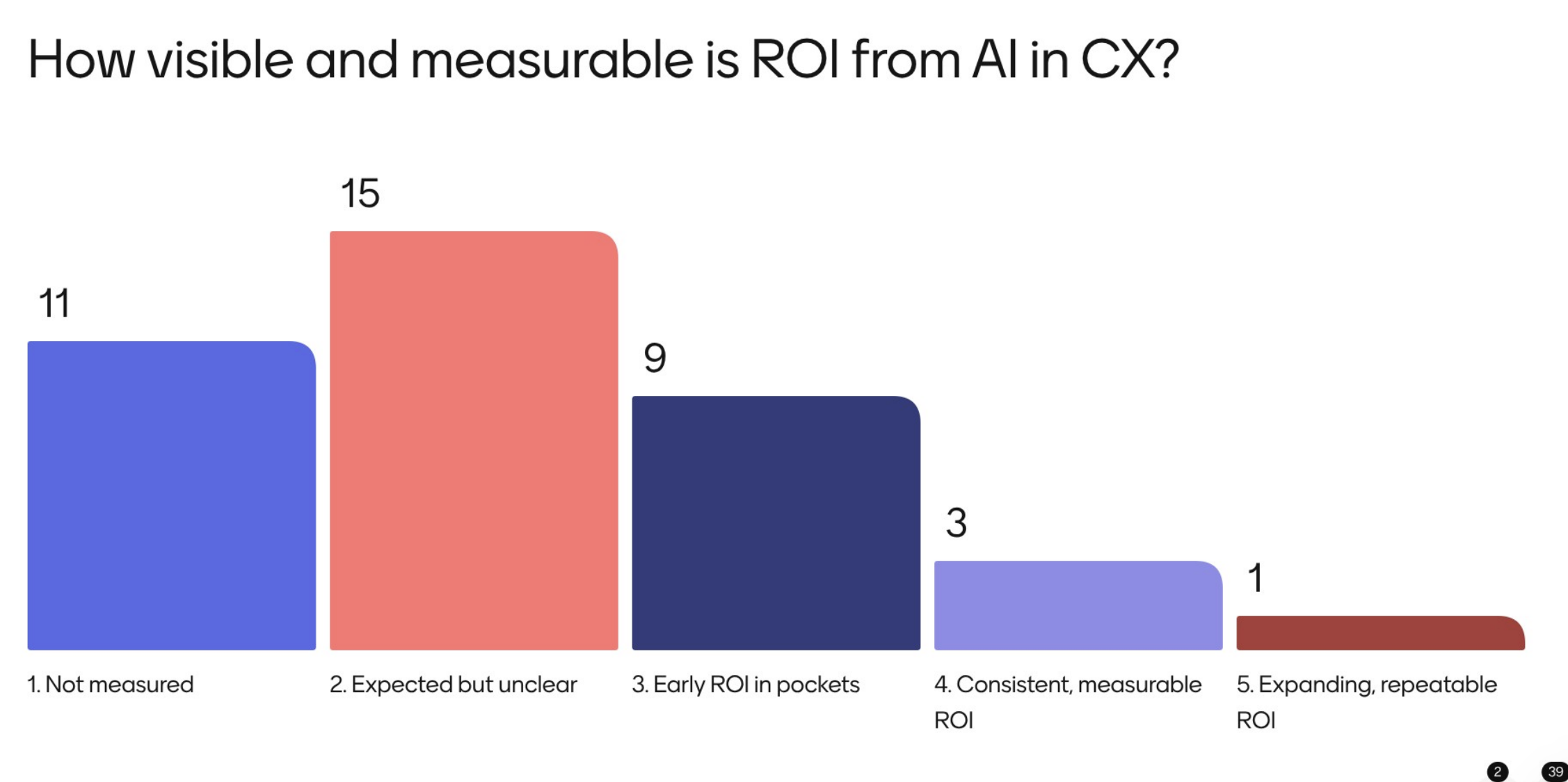

Poll 3: How visible and measurable is ROI from AI in CX?

The most common answer was “expected but unclear” at about 38%, followed by “not measured” at about 28%. The takeaway is not that ROI is absent. It is that attribution, baselines, and measurement frameworks are still catching up to the pace of investment.

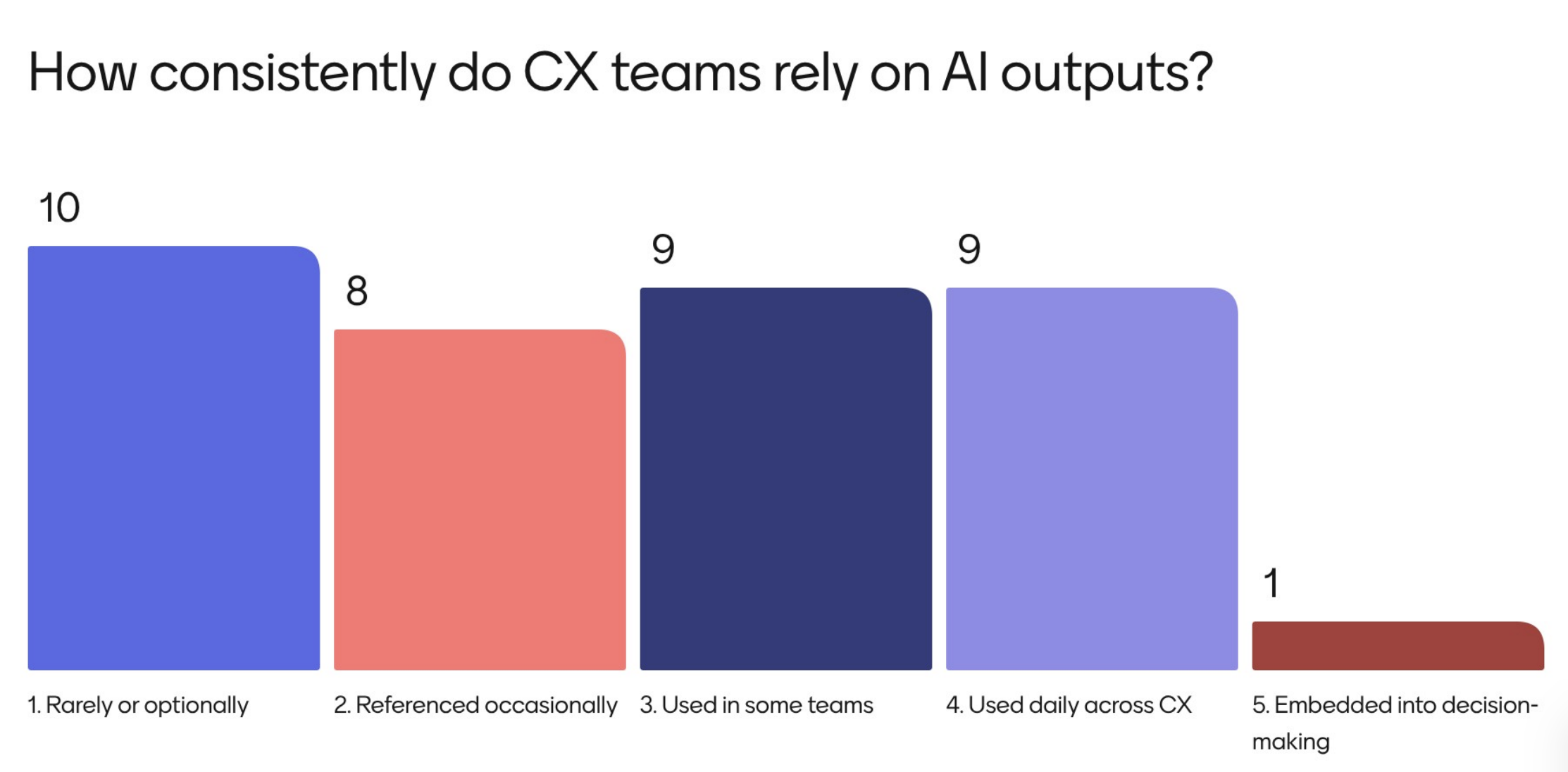

Poll 4: How consistently do CX teams rely on AI outputs?

The largest group, about 27%, said AI outputs are used rarely or optionally, while about 24% said AI outputs are used daily across customer experience. Adoption is happening, but it is uneven, often varying by channel, team, or use case maturity.

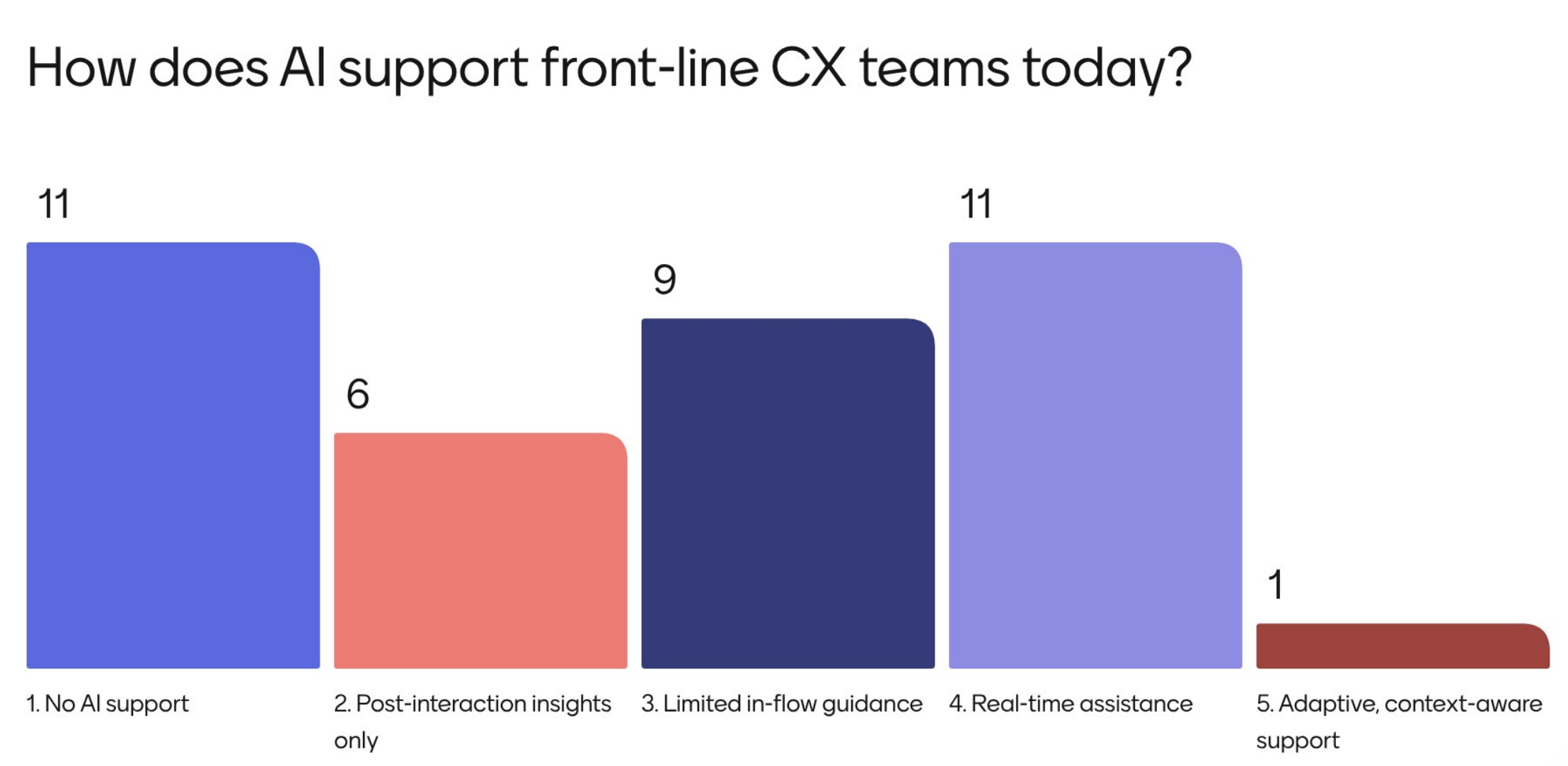

Poll 5: How does AI support front-line CX teams today?

Responses were split at the top: about 29% reported no AI support, and about 29% reported real-time assistance. That spread highlights just how wide the maturity range is, even among organizations actively investing in AI.

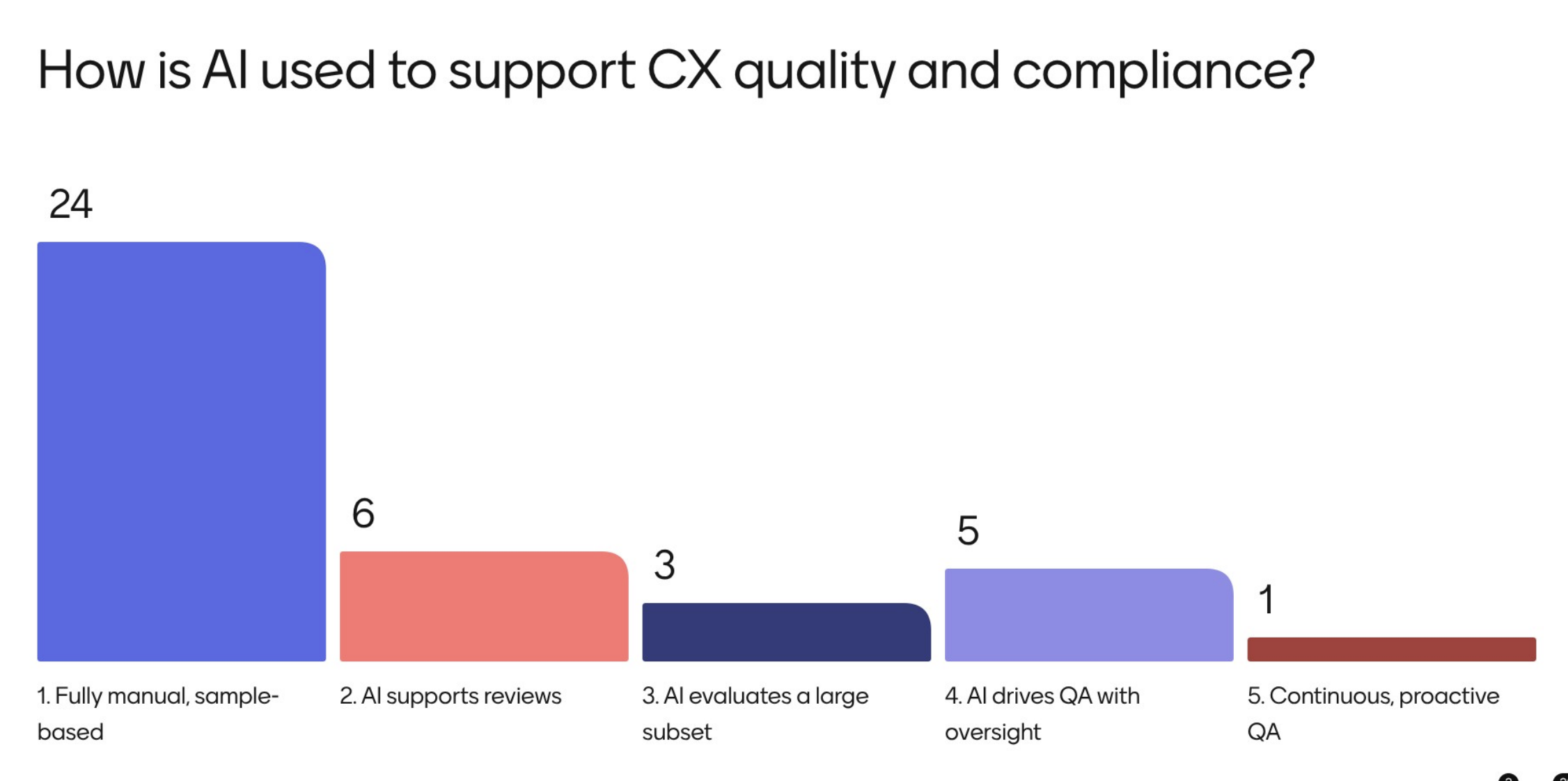

Poll 6: How is AI used to support CX quality and compliance?

This was one of the clearest signals in the room: about 62% said quality and compliance are still fully manual and sample-based. Even as AI expands in other areas, quality programs often remain traditional, which limits speed, coverage, and consistency.

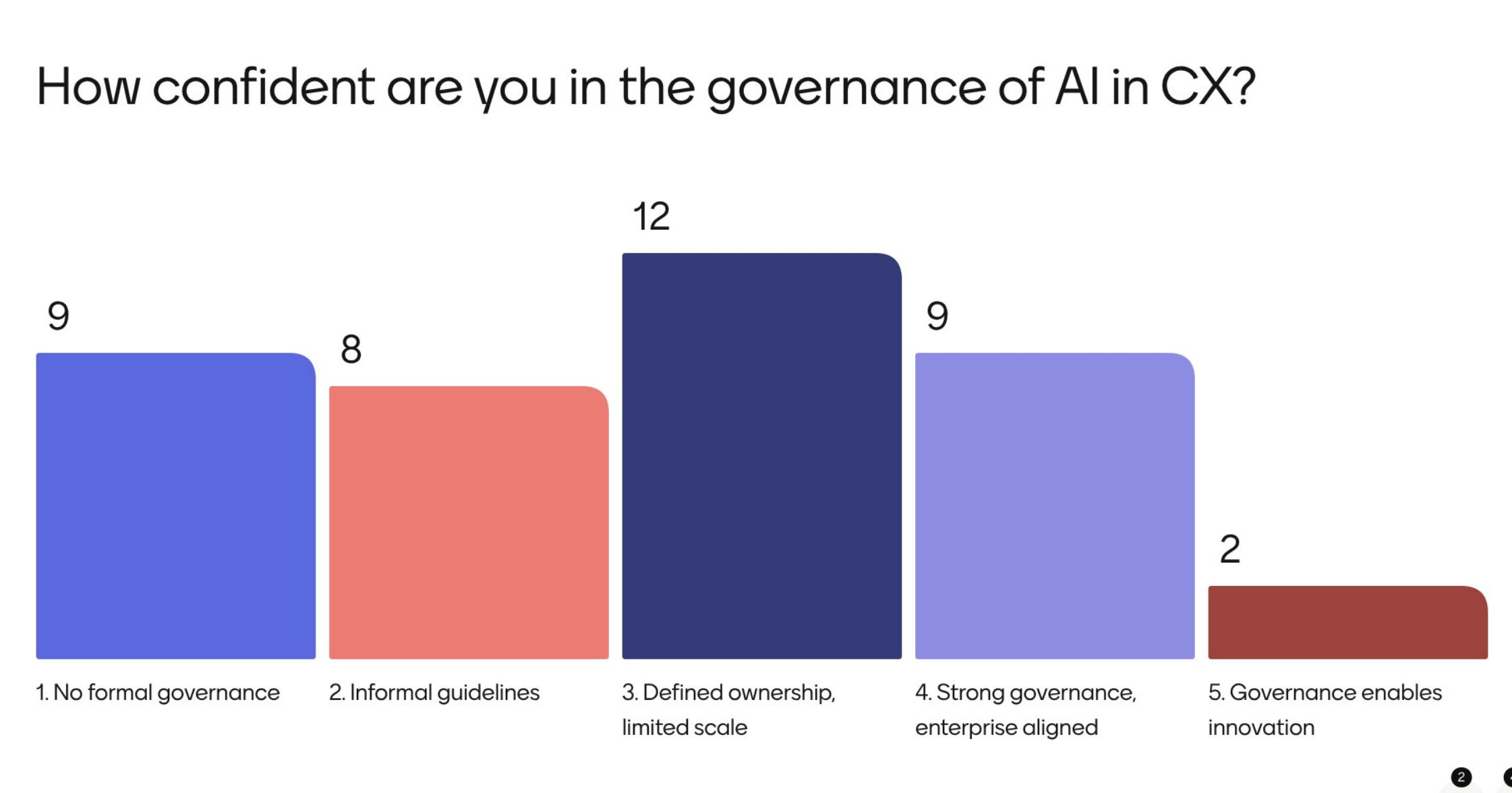

Poll 7: How confident are you in the governance of AI in CX?

The top response was “defined ownership with limited scale” at about 30%, followed by “strong governance aligned to the enterprise” at about 22%. Governance is developing, but many organizations are still building the structure needed to scale safely and consistently.

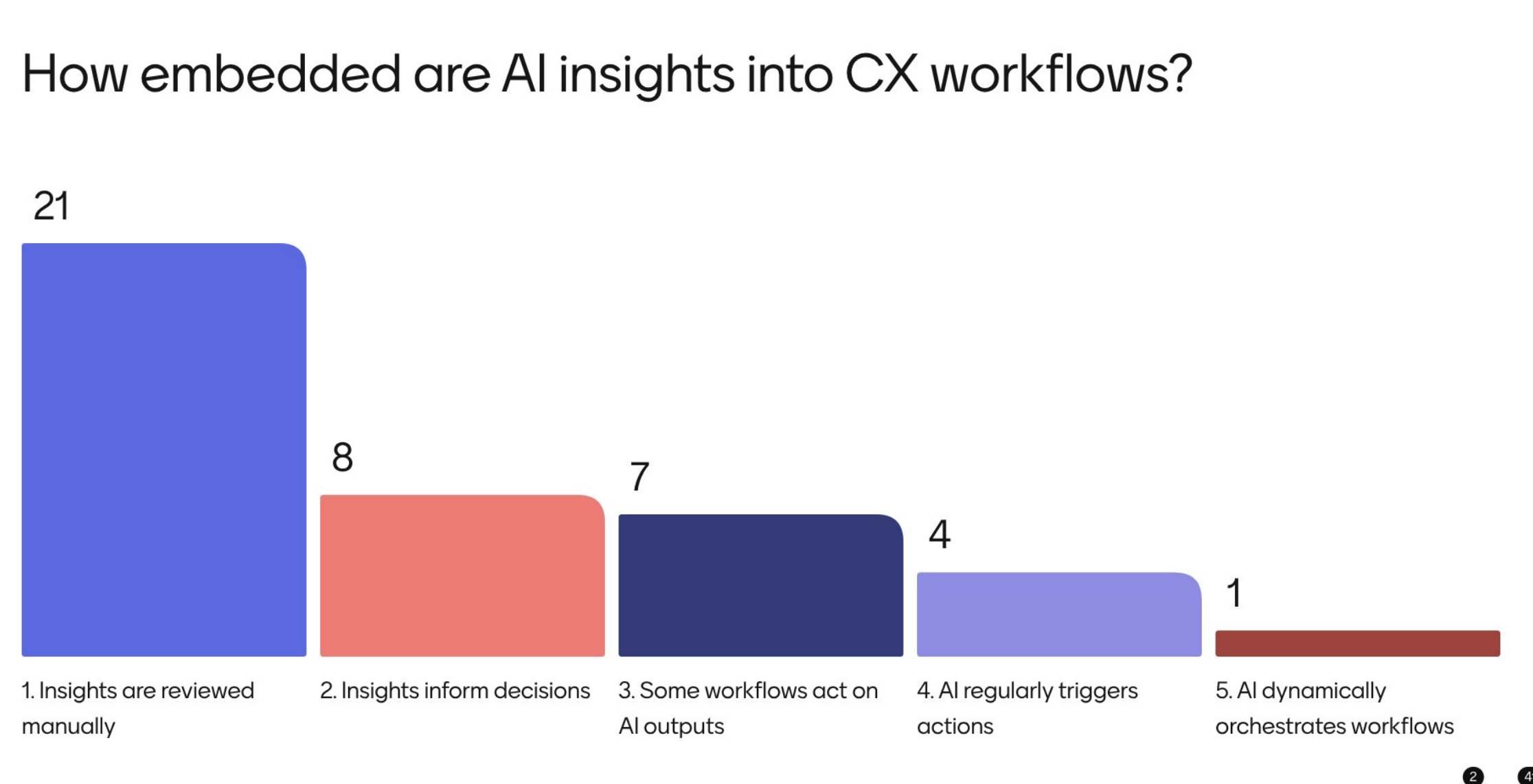

Poll 8: How embedded are AI insights into CX workflows?

A majority, about 51%, said insights are still reviewed manually. This explains why many teams struggle to move faster: if insights do not reliably trigger action, the organization is still doing interpretation and execution by hand.

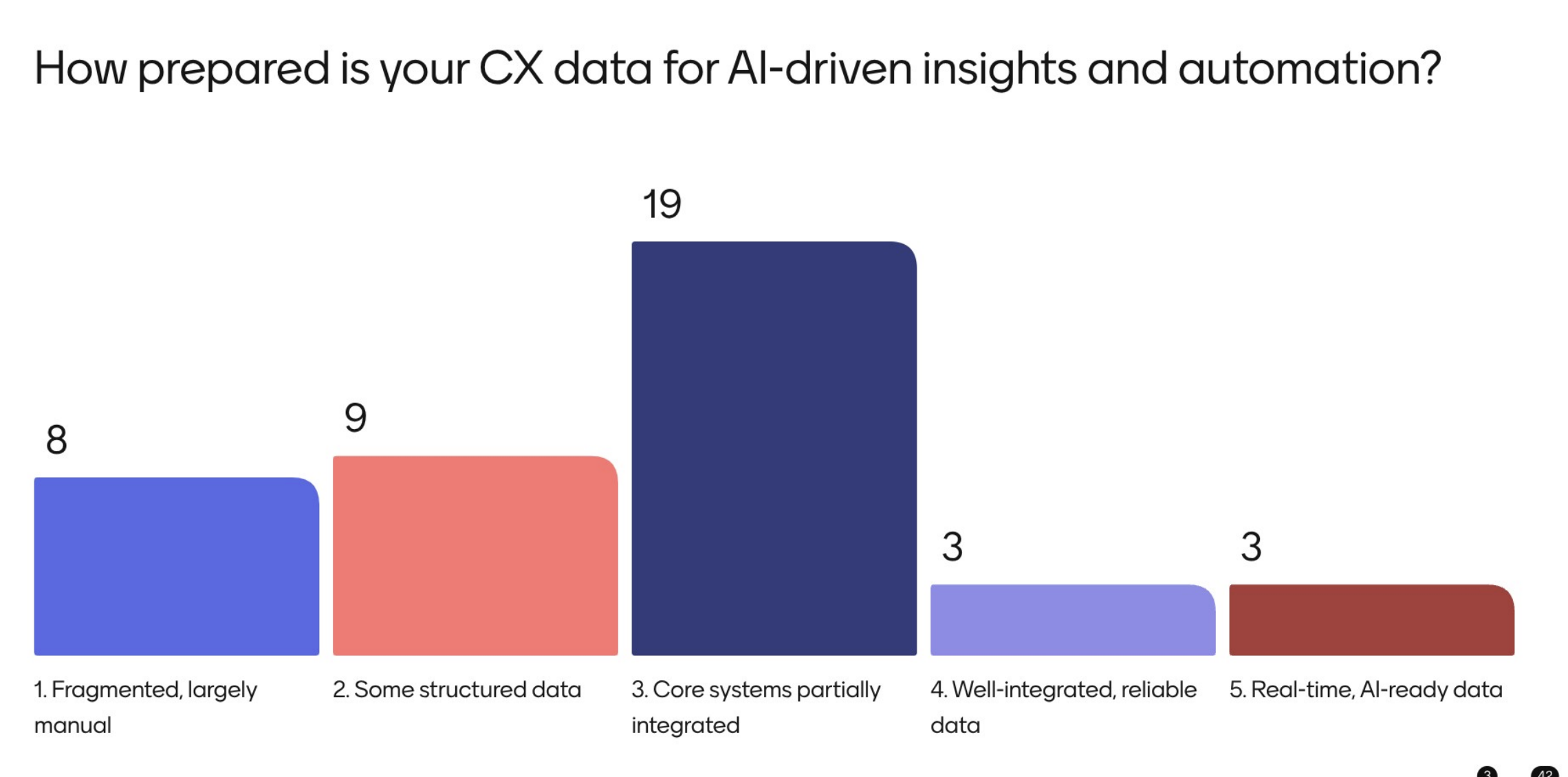

Poll 9: How prepared is your CX data for AI-driven insights and automation?

The largest group, about 45%, reported that core systems are partially integrated. This middle state is common, but it also makes orchestration harder: partial integration often means slower iteration, harder measurement, and fewer closed-loop workflows.

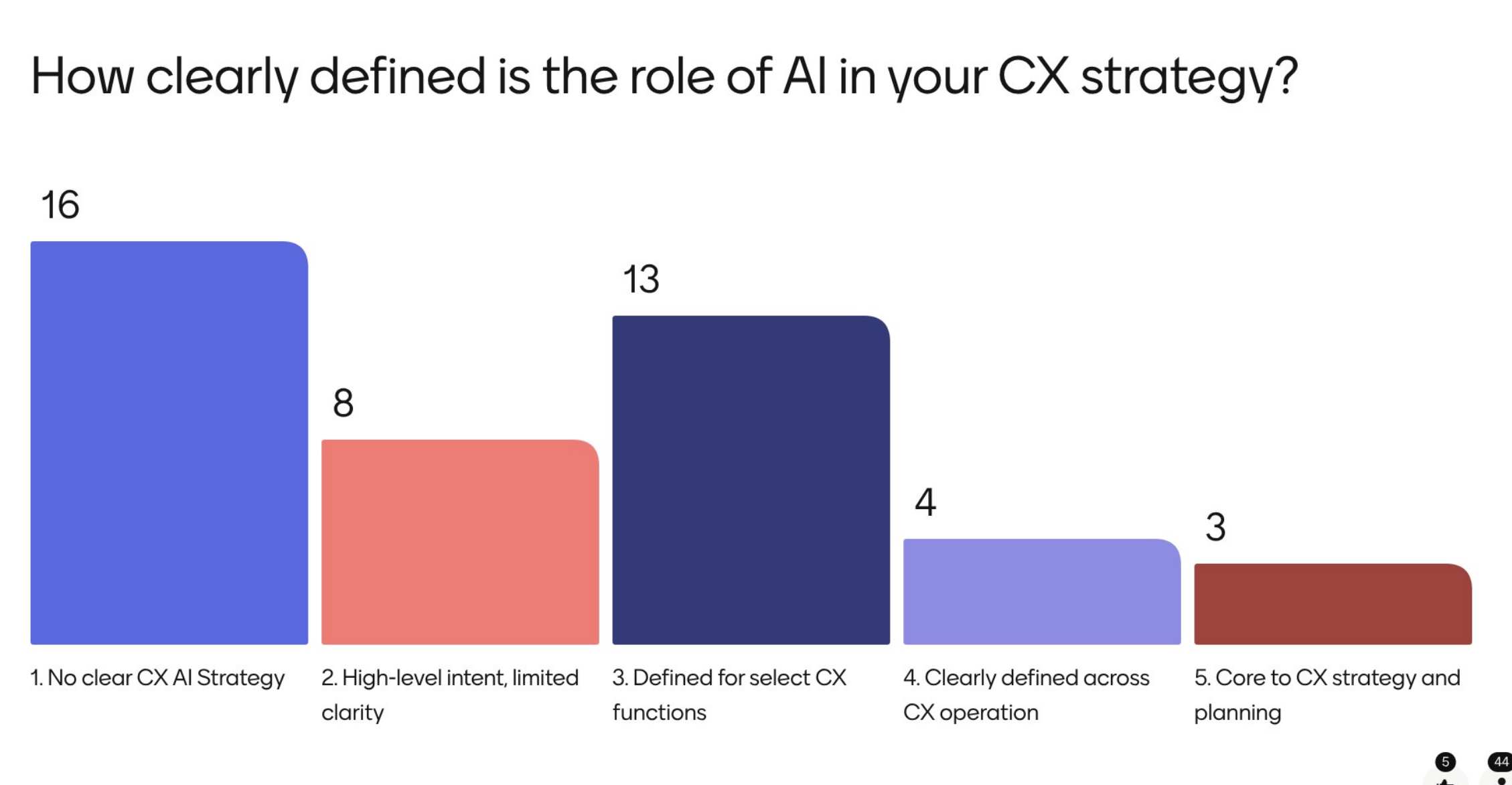

Poll 10: How clearly defined is the role of AI in your CX strategy?

About 36% reported no clear customer experience AI strategy, followed by about 30% who said AI is defined for select functions. Many teams are moving, but the poll suggests plenty are doing it without an enterprise-level definition of what AI is responsible for.

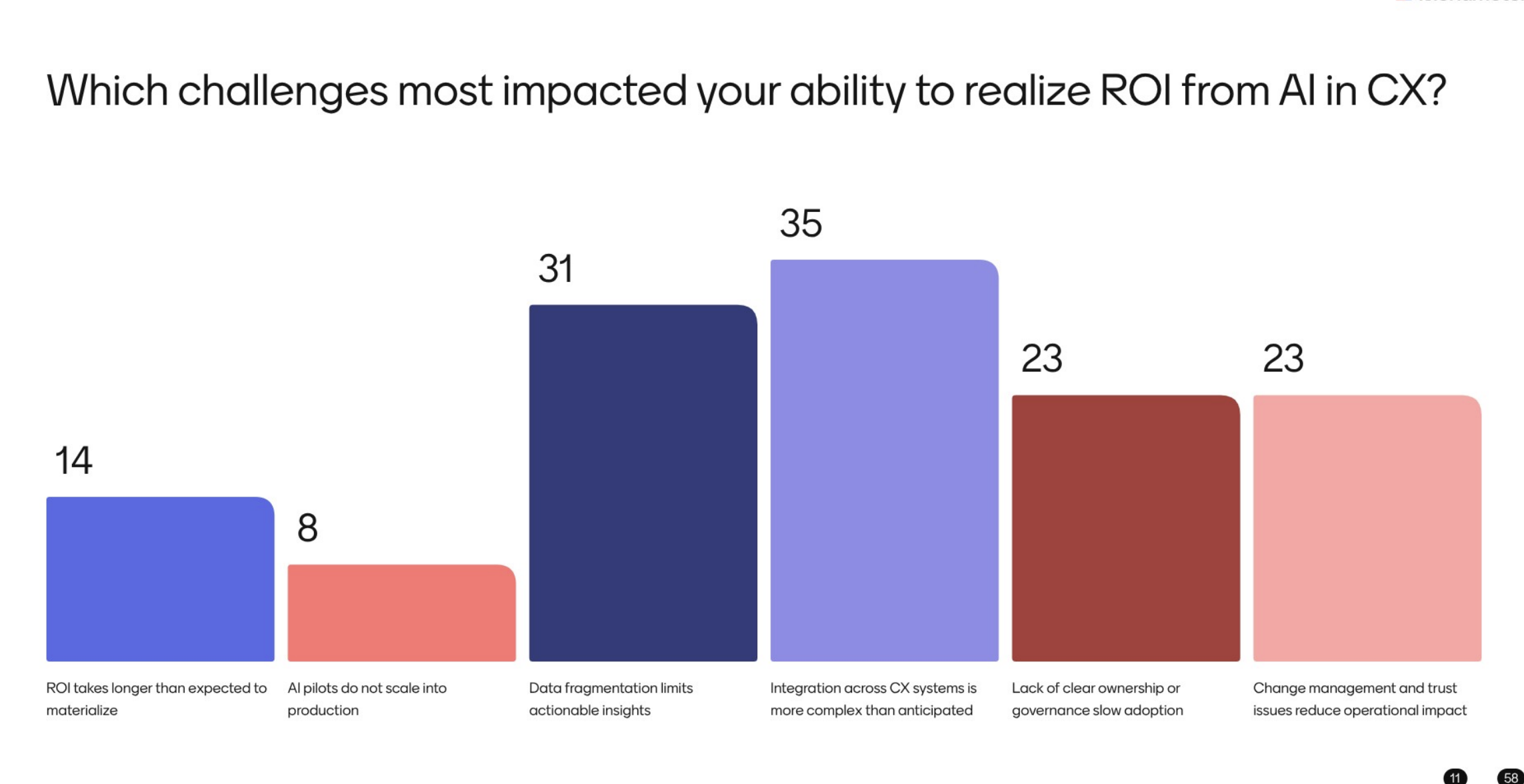

Poll 11: Which challenges most impacted your ability to realize ROI from AI in CX?

The biggest blockers were integration complexity at about 60% and data fragmentation at about 53%. Next came a lack of clear ownership or governance, and change management and trust, each at about 40%.

Fewer respondents cited ROI simply taking longer than expected (about 24%) or pilots failing to reach production (about 14%). The signal is clear: realizing ROI is less about the AI itself and more about the operating model around it, including integration, decision rights, and adoption.

What This Means for Leaders in 2026

Across every poll, one reality stood out: many organizations are in the “middle zone.” AI is active. Experiments are real. Some teams are seeing value. But the path to repeatable, enterprise-ready outcomes still depends on a few fundamentals:

- Clear ownership and governance that support scale

- Integrated data and systems that make measurement possible

- Workflows that turn insights into action, not just dashboards

- Quality programs that evolve beyond sample-based review

- Change management that earns trust across front-line teams

Where Liveops Fits In

At Liveops, this is exactly the gap we are built to close: moving from AI ambition to operational reality, without sacrificing quality, compliance, or customer trust.

Through LiveNexus by Liveops, we help enterprises test, govern, and scale AI and human orchestration in a way that is measurable and accountable. Combined with the agents in our network and an operating model designed for precise coverage, LiveNexus helps teams reduce friction, protect performance as volumes shift, and turn insights into outcomes they can sustain.

If you want to compare where your organization landed in these polls to what “next level maturity” looks like in practice, we would love to share what we are seeing across live programs.

Ready to move from pilots to measurable outcomes?

Talk with a Liveops specialist to benchmark your AI maturity, identify the fastest path to operational impact, and see how LiveNexus by Liveops can help you scale AI and human orchestration with confidence.
